Monitor performance, detect drift, and catch production issues before they impact users. Openlayer offers a modern monitoring layer designed for real-world ML systems.

Model monitoring tools

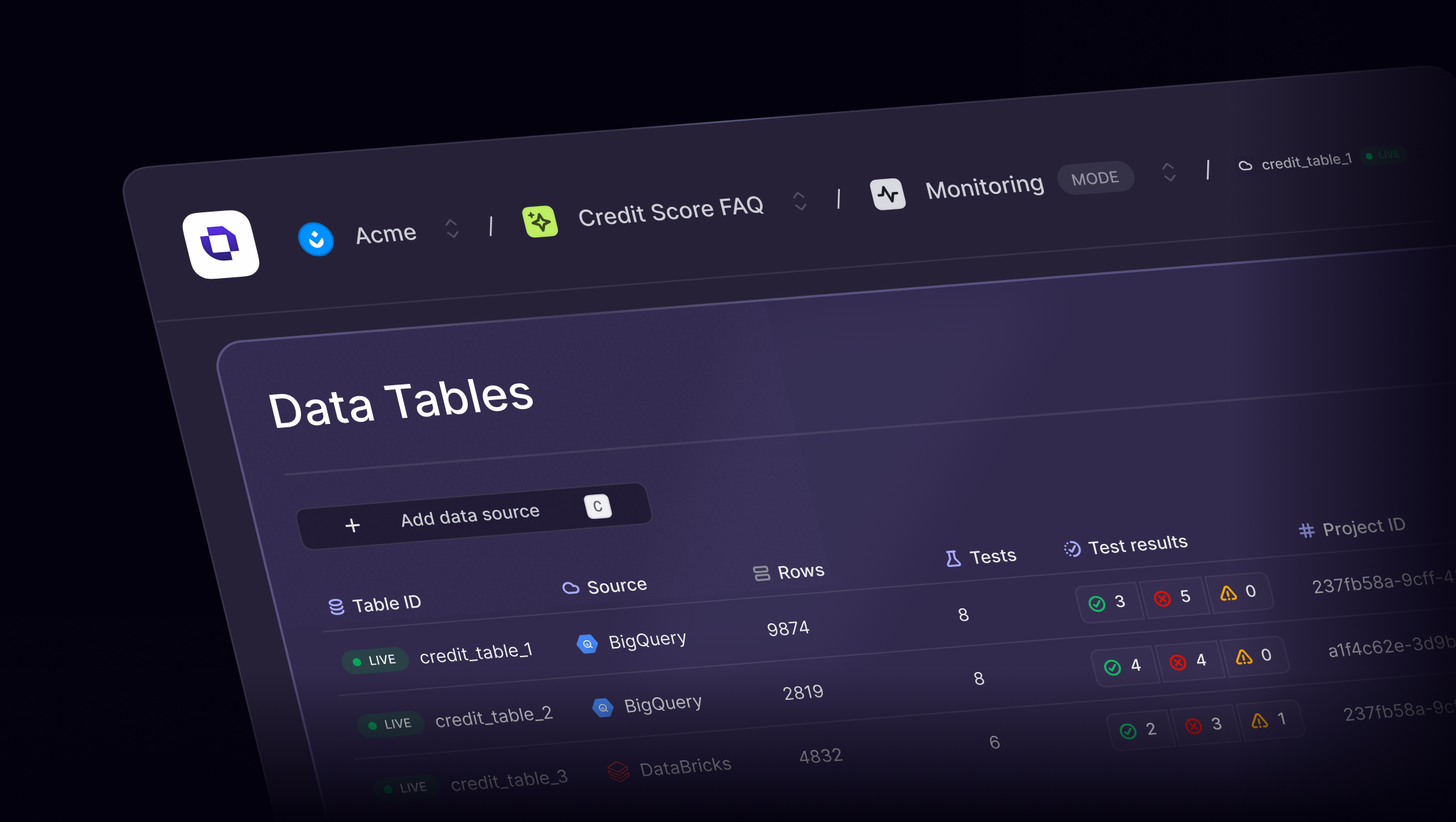

Model monitoring that goes beyond dashboards

Why model monitoring still matters

Production models don’t fail loudly; they fade quietly

Even well-trained models degrade over time. Upstream data changes, shifting user behavior, or infrastructure issues can silently erode performance.

That’s why model monitoring is a key layer of any resilient AI system.

Openlayer's approach to model monitoring

A monitoring layer that's flexible, observable, and reliable

Where monitoring fits in the lifecycle

Not just post-production, but part of a test-first workflow

Openlayer integrates monitoring into a broader evaluation lifecycle, from pre-deployment validation to post-deployment oversight.

Less firefighting

More control

Faster iteration

Why not just use a dashboard?

Dashboards show you what broke. Openlayer shows you why.

You don’t need more alerts; you need insight. Openlayer ties each model failure to a concrete measurement:

Input drift

Failing test cases

Version regressions
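
To make the first of these measurements concrete, here is a minimal sketch of one common way to quantify input drift: the Population Stability Index (PSI) between a reference sample and a production sample of a single numeric feature. This is a generic technique, not a description of how Openlayer computes drift. The function name `psi`, the bin count, and the epsilon value are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index for one numeric feature (illustrative sketch)."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        eps = 1e-6  # assumed floor so log() stays defined for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical data: training-time values vs. a production sample shifted upward.
reference_sample = [i / 100 for i in range(100)]
shifted_sample = [0.5 + i / 200 for i in range(100)]

print(psi(reference_sample, reference_sample))  # identical samples give no drift
print(psi(reference_sample, shifted_sample))    # shifted sample gives a large value
```

A widely quoted rule of thumb treats a PSI below 0.1 as negligible shift and above 0.25 as significant, but the right threshold depends on the feature and on how costly a missed regression is.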

Who it's for

Designed for ML engineers and platform teams

If you’re responsible for model reliability at scale, Openlayer’s model monitoring layer fits your workflow. Whether you manage a handful of models or hundreds, observability should be automated, not ad hoc.
