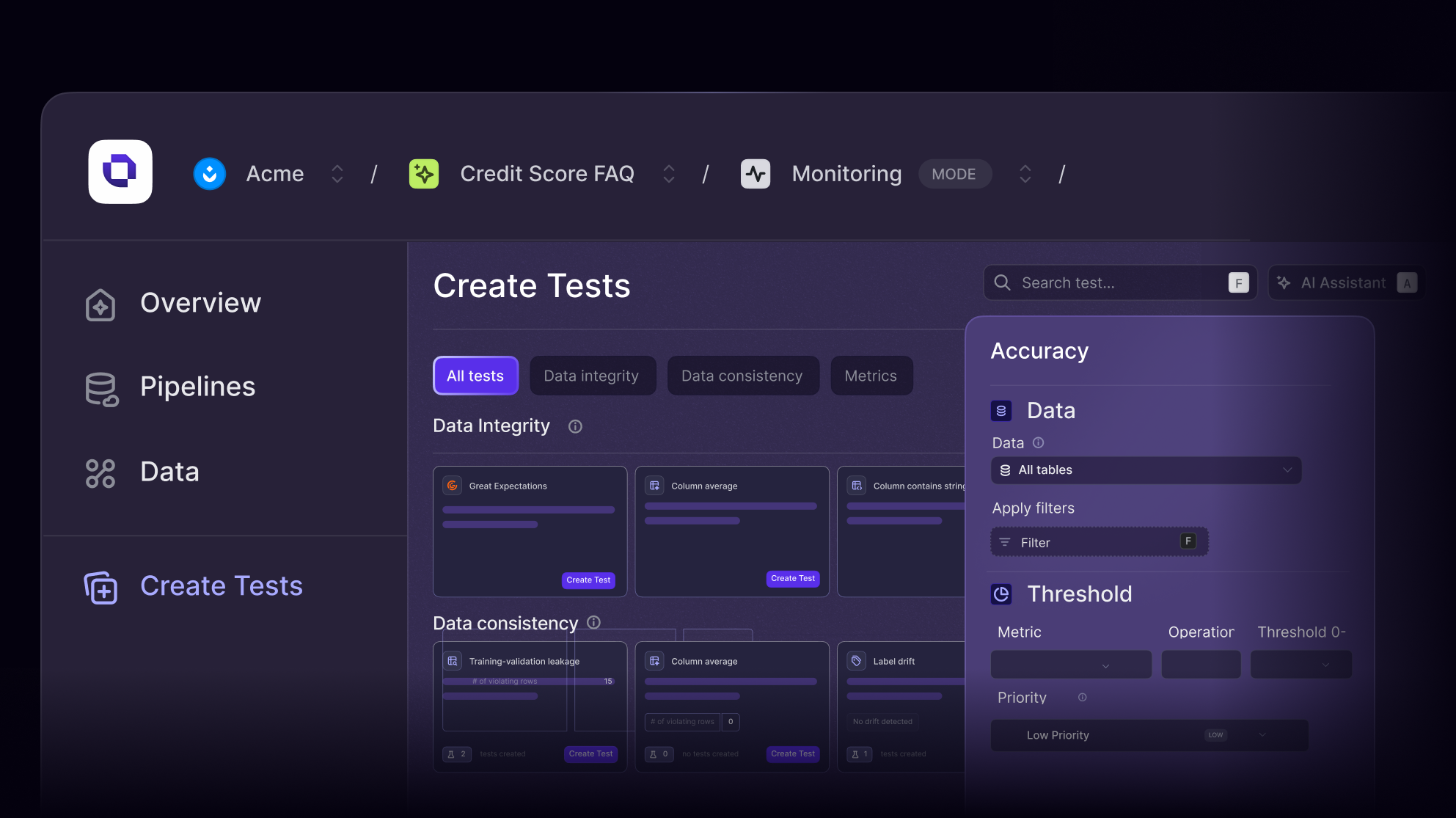

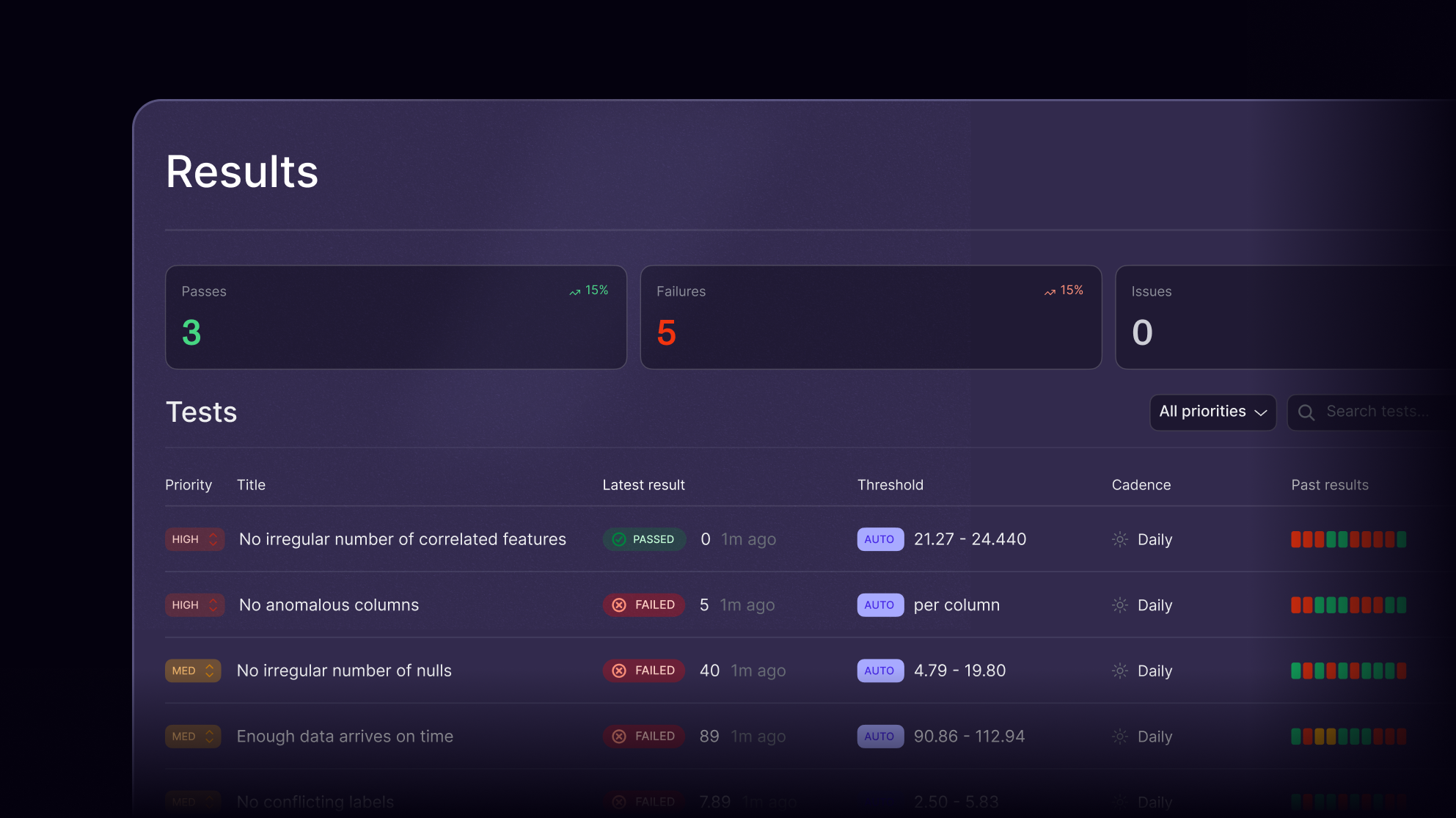

Evaluate your ML models with 100+ customizable tests, version comparisons, and automated CI/CD validation.

ML evaluation platform

Test every ML model. Catch every regression.

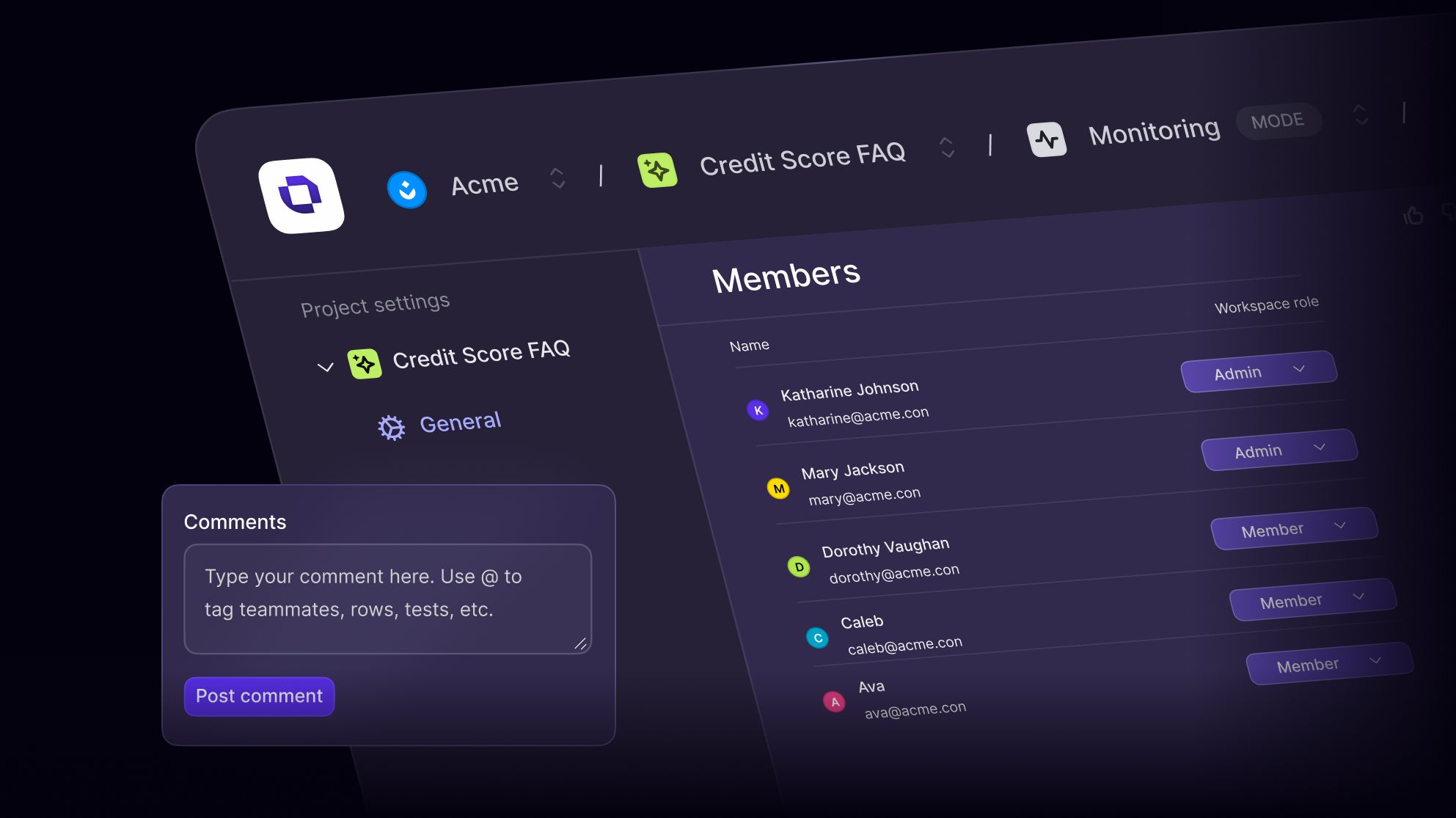

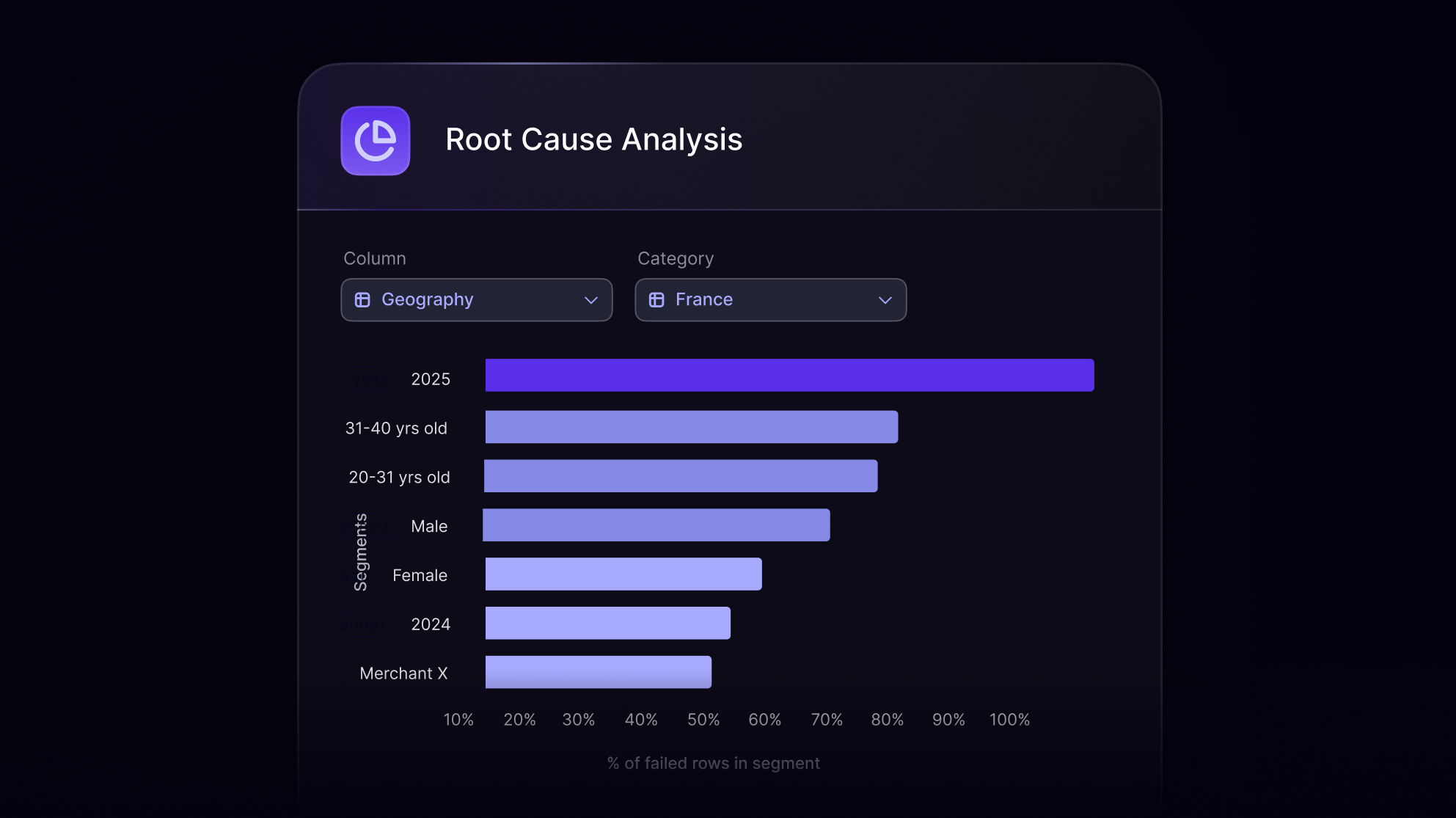

Core features

Explore the platform

Why it matters

Openlayer beats notebooks and dashboards

Integrations

Works with your stack, not against it

Openlayer fits into your workflow with minimal effort. Use our SDKs or CLI to trigger tests, integrate with GitHub Actions or GitLab CI, and connect to cloud storage like S3 or data warehouses like BigQuery. No vendor lock-in. No friction.
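As a rough illustration of triggering tests from a CI job, here is a minimal Python sketch that shells out to a command and turns its exit status into a pass/fail result. The only Openlayer-specific piece is the `openlayer push` command shown on this page. The helper names `run_validation` and `main` are hypothetical, and treating a nonzero exit status as a failed validation is an assumption of this sketch, not documented CLI behavior.

```python
import subprocess
import sys
from typing import Sequence


def run_validation(command: Sequence[str]) -> bool:
    """Run a command and return True only if it exits with status 0.

    Hypothetical helper: it knows nothing about Openlayer itself and
    simply wraps whatever command the CI job wants to gate on.
    """
    try:
        result = subprocess.run(list(command), check=False)
    except FileNotFoundError:
        # The executable is not installed or not on PATH.
        return False
    return result.returncode == 0


def main() -> int:
    """Return a process exit code suitable for a CI step."""
    # Assumption: the CLI signals failure through a nonzero exit status.
    ok = run_validation(["openlayer", "push"])
    return 0 if ok else 1
```

In a GitHub Actions or GitLab CI job, a script could end with `sys.exit(main())` so that a failed run blocks the pipeline.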

Customer

Trusted by enterprise AI teams

“Openlayer transformed our ML workflow. We now catch issues days earlier and have confidence in every deployment.”

ML Lead at Fintech Company

FAQs

Your questions, answered

$ openlayer push