Openlayer helps teams monitor large language model systems in real time: detecting failures, tracing outputs, and managing costs across applications.

LLM monitoring tools

LLM monitoring that goes beyond logs

Why monitoring LLMs is harder than it looks

Black-box models need white-glove monitoring

LLMs are non-deterministic, prompt-sensitive, and context-dependent. Without visibility into prompts, responses, and costs, teams are flying blind in production.

What to monitor in LLM systems

Stay ahead of hallucinations, delays & escalating costs

Openlayer's approach

Monitoring built for the nuances of LLMs

Monitor across chains, tools, and agent flows

Set thresholds for latency, cost, and output length

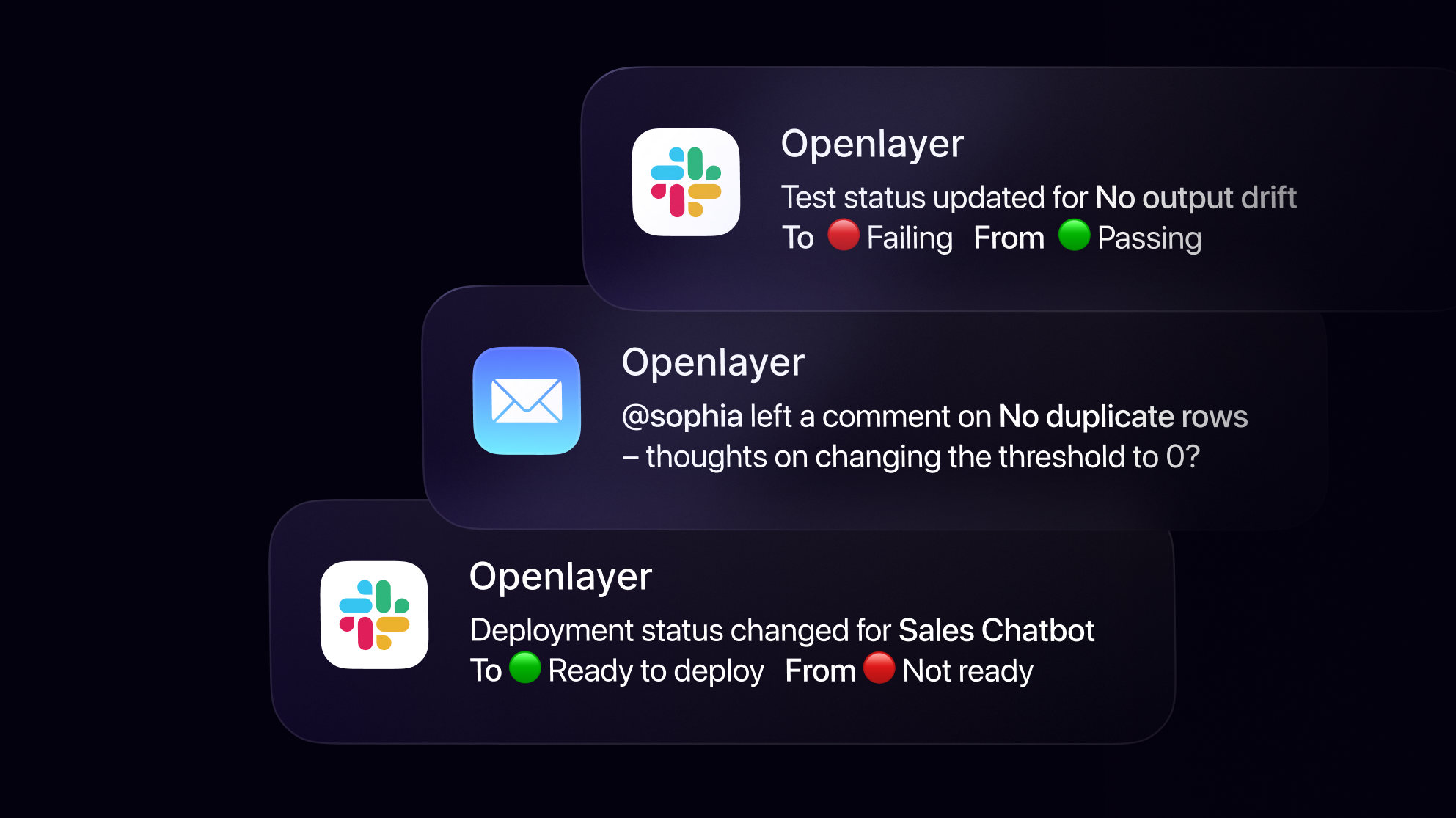

Alert on broken prompt chains or bad generations

View run history and compare performance over time

Works with OpenAI, Anthropic, open source models, and orchestrators like LangChain
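To make the threshold idea concrete, here is a minimal, generic sketch of checking a single logged LLM call against limits for latency, cost, and output length. It is not Openlayer's API: the `RunRecord` and `Thresholds` types, their field names, the `check_run` helper, and the default limit values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunRecord:
    """One logged LLM call. Field names are hypothetical, not Openlayer's schema."""
    prompt: str
    response: str
    latency_ms: float
    cost_usd: float


@dataclass
class Thresholds:
    """Illustrative limits; the defaults are placeholders, not recommendations."""
    max_latency_ms: float = 2000.0
    max_cost_usd: float = 0.05
    max_output_chars: int = 4000


def check_run(run: RunRecord, limits: Thresholds) -> List[str]:
    """Return the names of every threshold this run exceeds (empty if none)."""
    violations: List[str] = []
    if run.latency_ms > limits.max_latency_ms:
        violations.append("latency")
    if run.cost_usd > limits.max_cost_usd:
        violations.append("cost")
    if len(run.response) > limits.max_output_chars:
        violations.append("output_length")
    return violations


if __name__ == "__main__":
    slow_run = RunRecord(
        prompt="Summarize this ticket.",
        response="Customer reports a login failure.",
        latency_ms=2500.0,
        cost_usd=0.01,
    )
    # Only the latency limit is exceeded for this run.
    print(check_run(slow_run, Thresholds()))
```

In a real deployment the returned violation names would feed an alerting channel, and the limits would be tuned per application rather than hard-coded.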

FAQs

Your questions, answered
