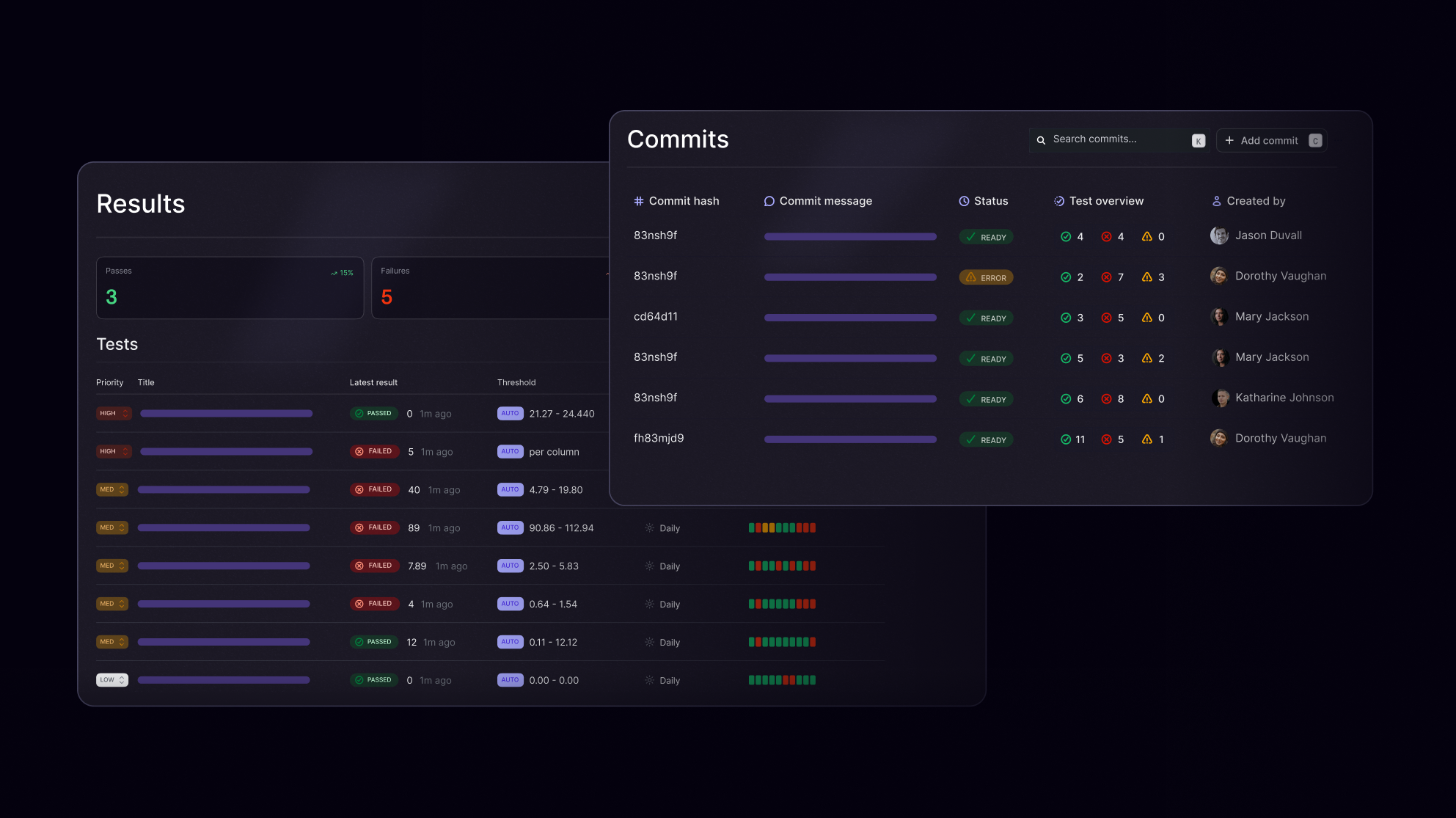

Openlayer gives GenAI teams visibility into their prompt iterations, model settings, and evaluation results—all in one place.

LLM experiment tracking

Track every LLM experiment. Understand what works.

Why LLM experiment tracking matters

Prompt engineering is still engineering

In GenAI, a small prompt tweak can change results dramatically. Yet many teams still track experiments in spreadsheets or chat threads, which makes it hard to tell what worked and why.

What Openlayer tracks

Prompt-to-pipeline experiment history

Built for GenAI development

Track, evaluate, improve

Compare LLM runs side-by-side

Tag, organize, and annotate prompts

Evaluate outputs with rubrics or LLM-as-a-judge

Share results across teams and experiments

Works across OpenAI, Anthropic, Hugging Face, and LangChain ecosystems
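
To make the ideas above concrete, here is a minimal sketch of what tracking and comparing two runs can look like in plain Python. It is not Openlayer's API: the `Run` class, the `keyword_rubric` scorer, the `compare` helper, and the `model-a` setting are all hypothetical names chosen for illustration, and the model outputs are hard-coded rather than generated.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One tracked experiment: prompt, model settings, output, tags, scores.

    Hypothetical structure for illustration only, not an Openlayer type.
    """
    name: str
    prompt: str
    settings: dict
    output: str
    tags: list = field(default_factory=list)
    scores: dict = field(default_factory=dict)


def keyword_rubric(output: str, required: list) -> float:
    """Toy rubric: fraction of required keywords found in the output."""
    if not required:
        return 0.0
    text = output.lower()
    hits = sum(1 for word in required if word.lower() in text)
    return hits / len(required)


def compare(a: Run, b: Run) -> dict:
    """Side-by-side view of what changed between two runs."""
    setting_keys = sorted(set(a.settings) | set(b.settings))
    score_keys = sorted(set(a.scores) | set(b.scores))
    return {
        "prompt_changed": a.prompt != b.prompt,
        # Only settings whose values differ between the runs.
        "settings": {
            k: (a.settings.get(k), b.settings.get(k))
            for k in setting_keys
            if a.settings.get(k) != b.settings.get(k)
        },
        # Every score, as (first run, second run).
        "scores": {k: (a.scores.get(k), b.scores.get(k)) for k in score_keys},
    }


# Outputs are hard-coded stand-ins for real model responses.
baseline = Run(
    name="baseline",
    prompt="Summarize the ticket.",
    settings={"model": "model-a", "temperature": 0.7},
    output="The user cannot log in.",
)
candidate = Run(
    name="candidate",
    prompt="Summarize the ticket in one sentence, naming the product and the error.",
    settings={"model": "model-a", "temperature": 0.2},
    output="Login to the billing portal fails with error 403.",
    tags=["prompt-v2"],
)

required = ["billing", "403"]
for run in (baseline, candidate):
    run.scores["keyword_coverage"] = keyword_rubric(run.output, required)

report = compare(baseline, candidate)
print(report)
```

A keyword rubric is the simplest possible scorer. An LLM-as-a-judge evaluation would slot into the same place, writing its score into `scores` under another key, so runs stay comparable regardless of how they were graded.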

$ openlayer push