Go beyond accuracy. Openlayer helps teams test AI models against real-world scenarios, edge cases, and evolving data.

AI model evaluation platform

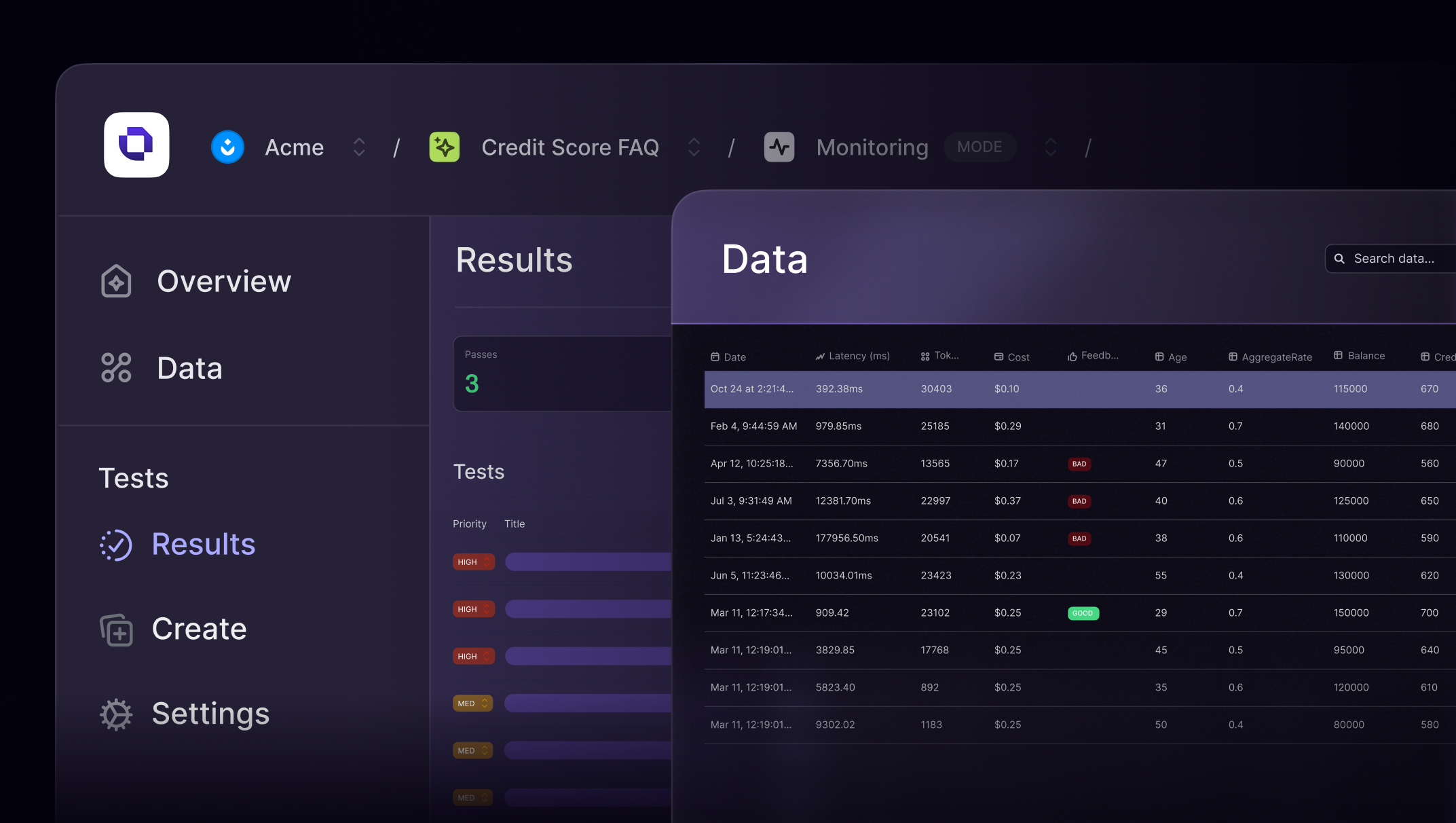

Evaluate AI models with precision and context

Why evaluation and validation are different

Accuracy is just the beginning

Most teams evaluate models by looking at a single metric. But that’s not enough. You need to understand why a model performs the way it does, where it fails, and whether it’s ready to deploy.
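To make this concrete, here is a minimal Python sketch (not Openlayer's API) of how one aggregate accuracy number can hide a weak segment. The slice names and records are hypothetical toy values invented for illustration, not real model results.

```python
from collections import defaultdict

# Hypothetical toy predictions: (slice name, true label, predicted label).
# These values are invented for illustration only.
records = [
    ("returning_customer", 1, 1),
    ("returning_customer", 0, 0),
    ("returning_customer", 1, 1),
    ("returning_customer", 0, 0),
    ("returning_customer", 1, 1),
    ("returning_customer", 0, 0),
    ("new_customer", 1, 0),
    ("new_customer", 0, 0),
]


def accuracy(pairs):
    """Fraction of (label, prediction) pairs that match."""
    pairs = list(pairs)
    return sum(y == p for y, p in pairs) / len(pairs)


def sliced_accuracy(rows):
    """Group rows by slice name and compute accuracy within each slice."""
    groups = defaultdict(list)
    for slice_name, y, p in rows:
        groups[slice_name].append((y, p))
    return {name: accuracy(pairs) for name, pairs in groups.items()}


# A single headline metric over every record.
overall = accuracy((y, p) for _, y, p in records)
# The same metric broken down per (hypothetical) slice.
per_slice = sliced_accuracy(records)

print(f"overall accuracy: {overall:.3f}")
for name, acc in sorted(per_slice.items()):
    print(f"  {name}: {acc:.3f}")
```

With this toy data the overall accuracy is 0.875, which looks healthy, while the hypothetical `new_customer` slice scores only 0.5. Breaking a metric down by slice is one common way to see where a model fails before deciding whether it is ready to deploy.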

Evaluation + validation = trust

A framework for AI model confidence

Built for all AI systems

Evaluate any model—ML, LLM, or hybrid

Tabular and time-series models

Generative AI systems (LLMs, RAG, agents)

Multimodal AI (CV, NLP, structured)

Custom workflows via API, SDK, or CLI

FAQs

Your questions, answered

$ openlayer push